In November, we announced Power BI’s self-service data preparation capabilities with dataflows, making it possible for business analysts and BI professionals to author and manage complex data prep tasks using familiar self-service tools. Dataflow data can be easily shared across Power BI, allowing business analysts and BI professionals to save time and resources by building on each other’s work, instead of duplicating it, leading to more unified, less siloed data. Learn more about Power BI data prep capabilities here.

Today, we’re excited to announce integration between Power BI dataflows and Azure Data Lake Storage Gen2 (preview), empowering organizations to unify data across Power BI and Azure data services. With this integration, business analysts and BI professionals working in Power BI can easily collaborate with data analysts, engineers, and scientists working in Azure. These new features free valuable time and resources previously spent extracting and unifying data from different sources, so your team can focus on turning data into insights.

Breaking data silos with Azure Data Lake Storage Gen2 and Common Data Model folders

Data is a company’s most valuable asset. Business analysts and data professionals spend a great deal of time and effort extracting data from different sources and recovering its semantic information, which is often trapped in the business logic that created the data or stored separately from it. This makes collaboration harder and time to insights longer.

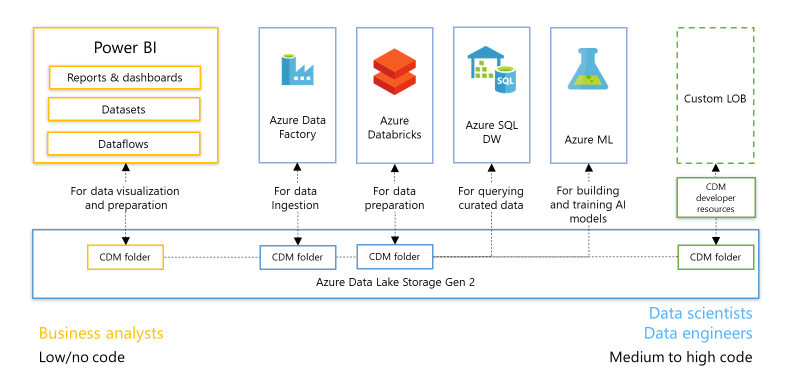

To address these challenges, Power BI and Azure data services have teamed up to leverage Common Data Model (CDM) folders as the standard to store and describe data, with Azure Data Lake Storage as the shared storage layer. CDM folders contain schematized data and metadata in a standardized format, to facilitate data exchange and to enable full interoperability across services that produce or consume data stored in an organization’s Azure Data Lake Storage account.
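
To make the idea of "schematized data and metadata" concrete, here is a minimal Python sketch of how a consumer might list the entities and attributes described by a CDM folder's metadata file. It assumes the metadata is a JSON document (commonly named model.json) with an `entities` array whose items carry `name`, `attributes`, and `partitions`. The sample content, entity names, and URL are hypothetical and heavily trimmed, so treat this as an illustration rather than a complete reader.

```python
import json

# Hypothetical, trimmed-down metadata document; all names and values are
# illustrative and not taken from a real dataflow.
SAMPLE_MODEL_JSON = """
{
  "name": "SalesDataflow",
  "version": "1.0",
  "entities": [
    {
      "$type": "LocalEntity",
      "name": "Customer",
      "attributes": [
        {"name": "CustomerId", "dataType": "int64"},
        {"name": "CustomerName", "dataType": "string"}
      ],
      "partitions": [
        {"name": "part-0", "location": "https://example.invalid/Customer/part-0.csv"}
      ]
    }
  ]
}
"""


def summarize_model(model_text):
    """Return a dict mapping each entity name to its (attribute, type) pairs."""
    model = json.loads(model_text)
    summary = {}
    for entity in model.get("entities", []):
        summary[entity["name"]] = [
            (attr["name"], attr.get("dataType", "unspecified"))
            for attr in entity.get("attributes", [])
        ]
    return summary


if __name__ == "__main__":
    for entity_name, attributes in summarize_model(SAMPLE_MODEL_JSON).items():
        print(entity_name, attributes)
```

Because the schema travels with the data, any service that can read the folder can discover what each file contains without access to the logic that produced it.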

One of the compelling features of dataflows is the ease with which any authorized Power BI user can build semantic models on top of their data. Because dataflows already store data in CDM folders, the integration between Power BI and Azure Data Lake makes it possible for any authorized person or service to easily leverage dataflow data, using CDM folders as a shared standard.

Furthermore, with the introduction of the CDM folder standard and developer resources, authorized services and people can not only read, but also create and store CDM folders in their organization’s Azure Data Lake Storage account. Once a CDM folder has been created in an organization’s Data Lake Storage account, it can be added to Power BI as a dataflow, so you can build semantic models on top of the data in Power BI, further enrich it, or process it from other dataflows.

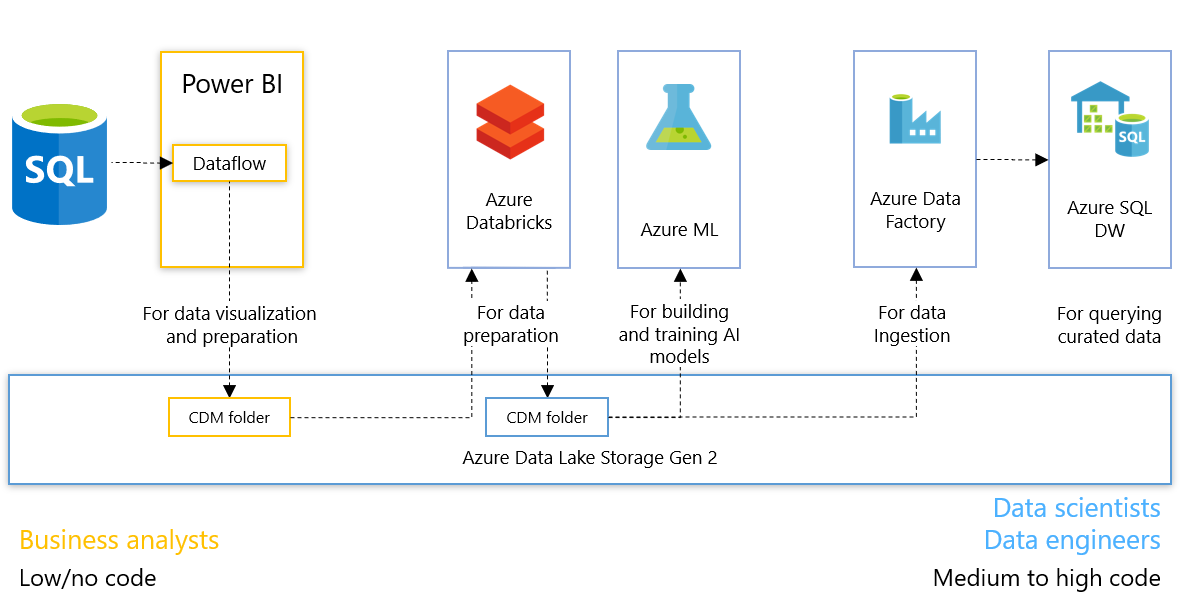

The diagram below showcases a range of services contributing to and leveraging data from CDM folders in a data lake.

Power BI and Azure Data Lake Storage Gen2 integration features

Power BI customers can now:

- Connect an Azure Data Lake Storage Gen2 account to Power BI

- Configure workspaces to store dataflow definition and data files in CDM folders in Azure Data Lake

- Attach CDM folders created by other services to Power BI as dataflows

- Create datasets, reports, dashboards, and apps using dataflows created from CDM folders in Azure Data Lake

These new Power BI capabilities are available today for Power BI Pro, Power BI Premium, and Power BI Embedded customers. All you need to get started is an Azure Data Lake Storage Gen2 account.

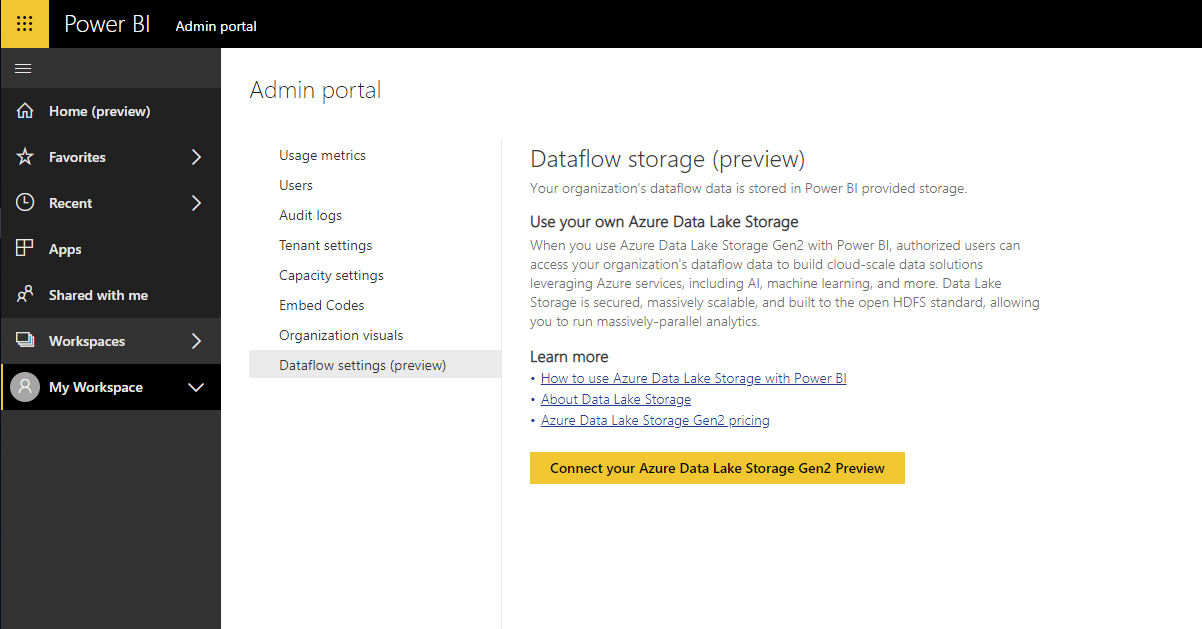

Store dataflow data in your organization’s Azure Data Lake Storage

Before you can start storing Power BI dataflows in your organization’s Azure Data Lake Storage account, your administrator needs to connect an Azure Data Lake Storage account to Power BI. Once connected, Power BI administrators can allow Power BI users to configure their workspaces to use the Azure storage account for dataflow storage.

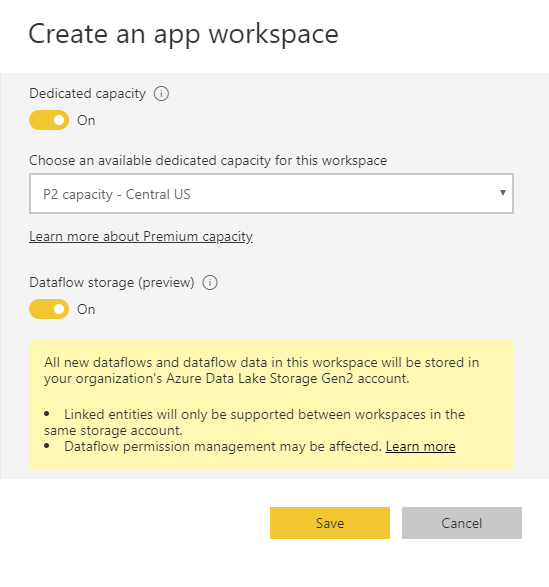

Assign workspaces to your Azure Data Lake Storage Gen2

Once a dataflow storage account has been configured for Power BI and storage assignment permissions have been enabled, workspace admins can configure dataflow storage settings. By default, dataflow definition and data files are stored in Power BI-provided storage. Turn on dataflow storage for your workspace to store dataflows in your organization’s Azure Data Lake Storage:

Once saved, dataflows created in the workspace will store their definition files and data in your organization’s Azure Data Lake Storage account.

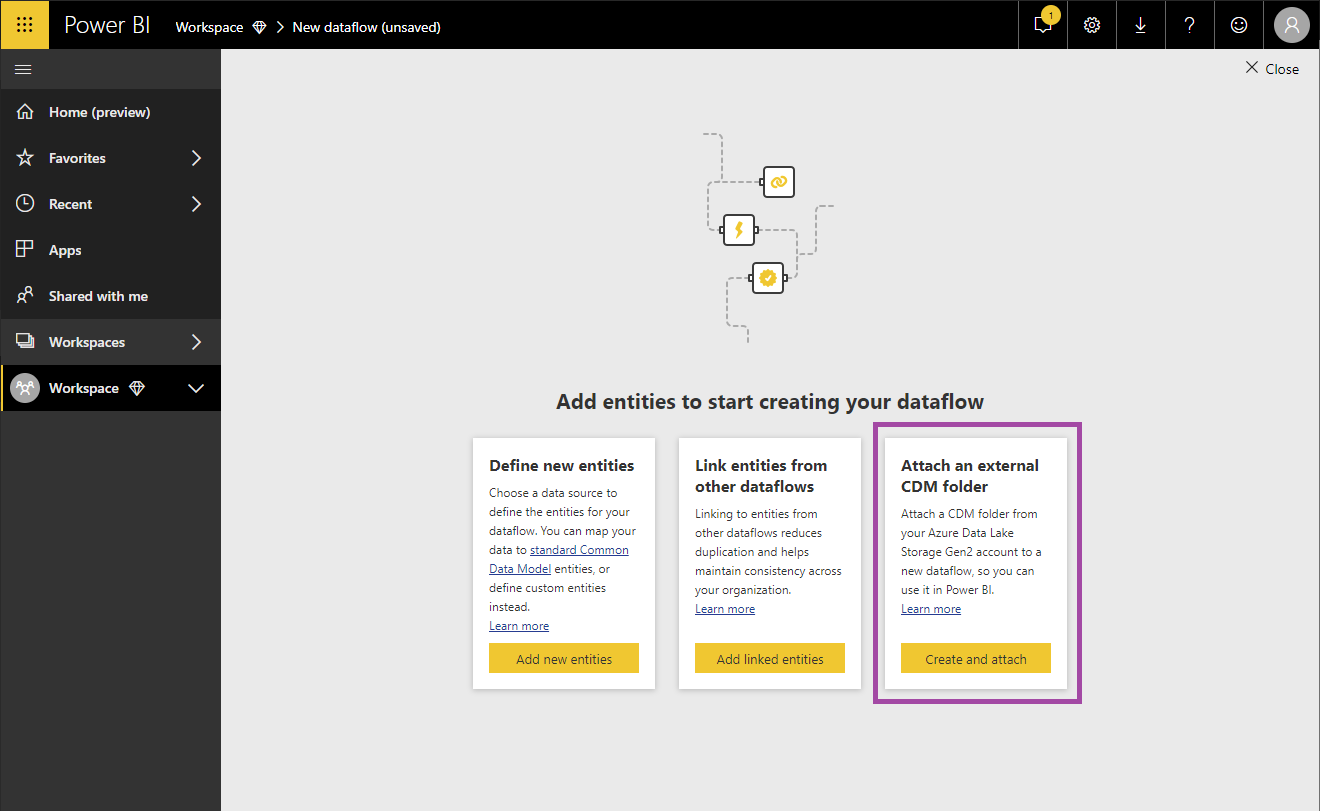

Read data from CDM folders created by other services with Power BI

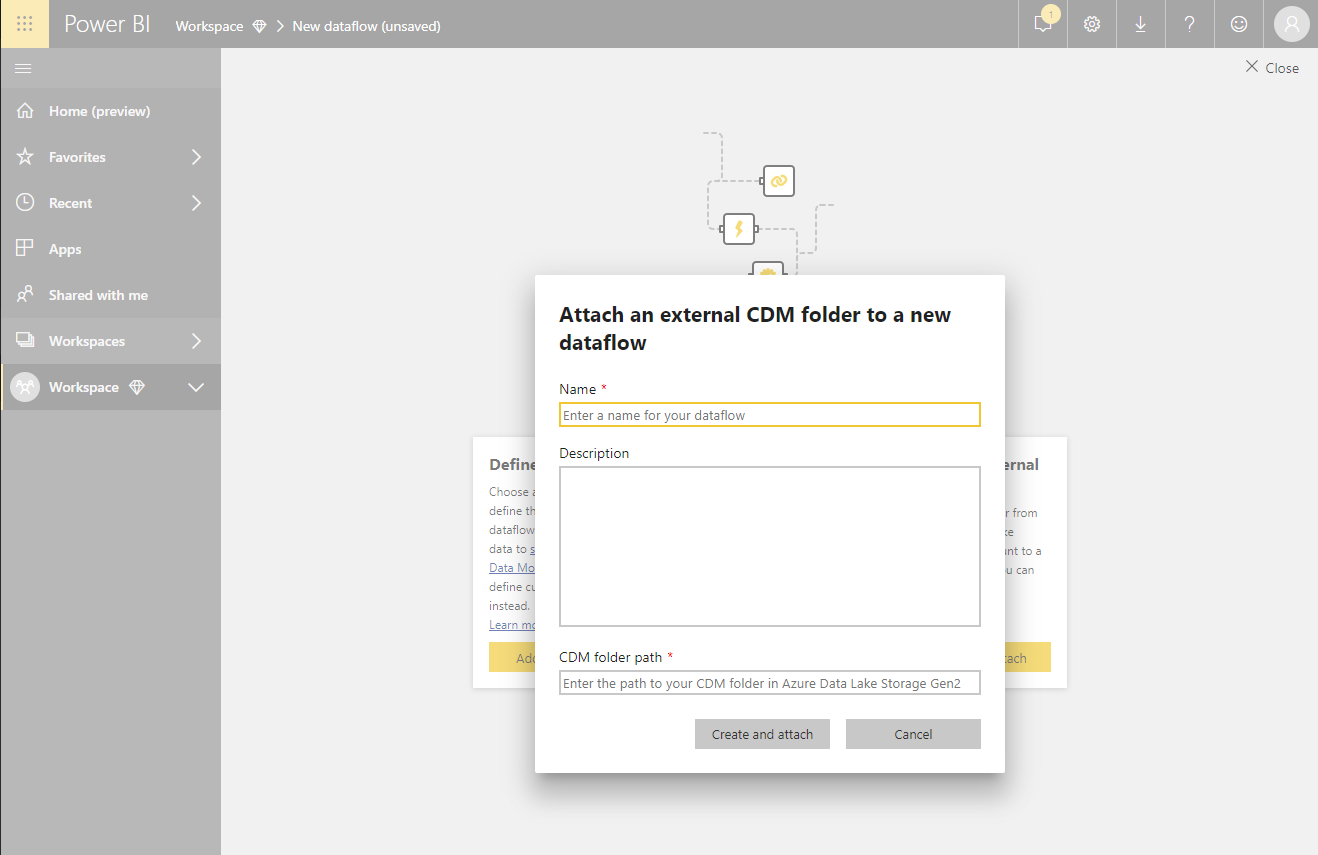

Power BI is only one of the services that can create CDM folders. Azure data services and developer resources can also be used to create and store CDM folders in Azure Data Lake Storage. Once in Data Lake Storage, CDM folders can be easily added to Power BI and used as dataflows: you can use Power BI Desktop and the Power BI service to create datasets, reports, dashboards, and apps using data from the CDM folder, just as you would with a dataflow authored in Power BI. To make this process as simple as possible, we added a new option when creating a new dataflow in Power BI, allowing you to attach an external CDM folder to a new dataflow:

Adding a CDM folder to Power BI is easy: just provide a name and description for the dataflow and the location of the CDM folder in your Azure Data Lake Storage account.

And that’s it. You can now leverage the Power BI dataflow connector to view the data and schema exactly as you would for any dataflow.
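
For services outside Power BI that read the same CDM folder, the schema and the data have to be paired up by the consumer. The Python sketch below shows one way this might look, assuming the data files are CSV partitions without a header row and that column names come, in order, from the entity's attribute list in the metadata file. The attribute names and rows are hypothetical, and `rows_as_records` is an illustrative helper, not part of any Power BI or Azure SDK.

```python
import csv
import io


def rows_as_records(attribute_names, csv_text):
    """Pair each headerless CSV row with attribute names taken from the schema.

    Values are left as strings; type conversion based on the declared data
    types is deliberately out of scope for this sketch.
    """
    reader = csv.reader(io.StringIO(csv_text))
    return [dict(zip(attribute_names, row)) for row in reader if row]


if __name__ == "__main__":
    # Hypothetical attribute names (as they might appear in the metadata file)
    # and hypothetical partition content.
    attribute_names = ["CustomerId", "CustomerName"]
    partition_text = "1,Contoso\n2,Fabrikam\n"

    for record in rows_as_records(attribute_names, partition_text):
        print(record)
```

Keeping the column names in the metadata rather than in each file is what lets every consumer interpret the partitions the same way.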

Now available: Power BI, Azure Data and AI integration sample

You can get started with tutorials and samples and learn how data sharing between Power BI and Azure data services using CDM folders can break down data silos and unlock new insights in your organization. In this tutorial, Power BI dataflows are used to ingest key analytics data from the Wide World Importers operational database into the organization’s Azure Data Lake Storage account. Then, Azure Databricks is used to format and prepare data and store it in a new CDM folder in Azure Data Lake. Azure Machine Learning reads data from the CDM folder to train and publish a machine learning model that can be accessed from Power BI, or other applications, to make real-time predictions. In parallel, the data from the CDM folder is loaded into staging tables in an Azure SQL Data Warehouse by Azure Data Factory, where it’s transformed into a dimensional model.

The diagram below illustrates the sample scenario, showing how services can interoperate over Azure Data Lake with CDM folders:

Today, Power BI and Azure data services are taking the first steps to enable data exchange and interoperability through the Common Data Model and Azure Data Lake Storage. We are continuously working to add new features. Please visit the Power BI community and share what you’re doing, ask questions, or submit new ideas.

Next steps

- Learn more about how to work with dataflows by reading our documentation

- Learn about Power BI and Azure Data Lake Storage Gen2 integration concepts, including how Power BI organizes and protects your data in Azure Data Lake Storage

- Read the announcement from our Azure Data Services partners

- Read the Azure Data Lake Storage Gen2 Preview announcement and sign up for the preview

- Learn more about CDM, ADLS Gen2, and the CDM model file